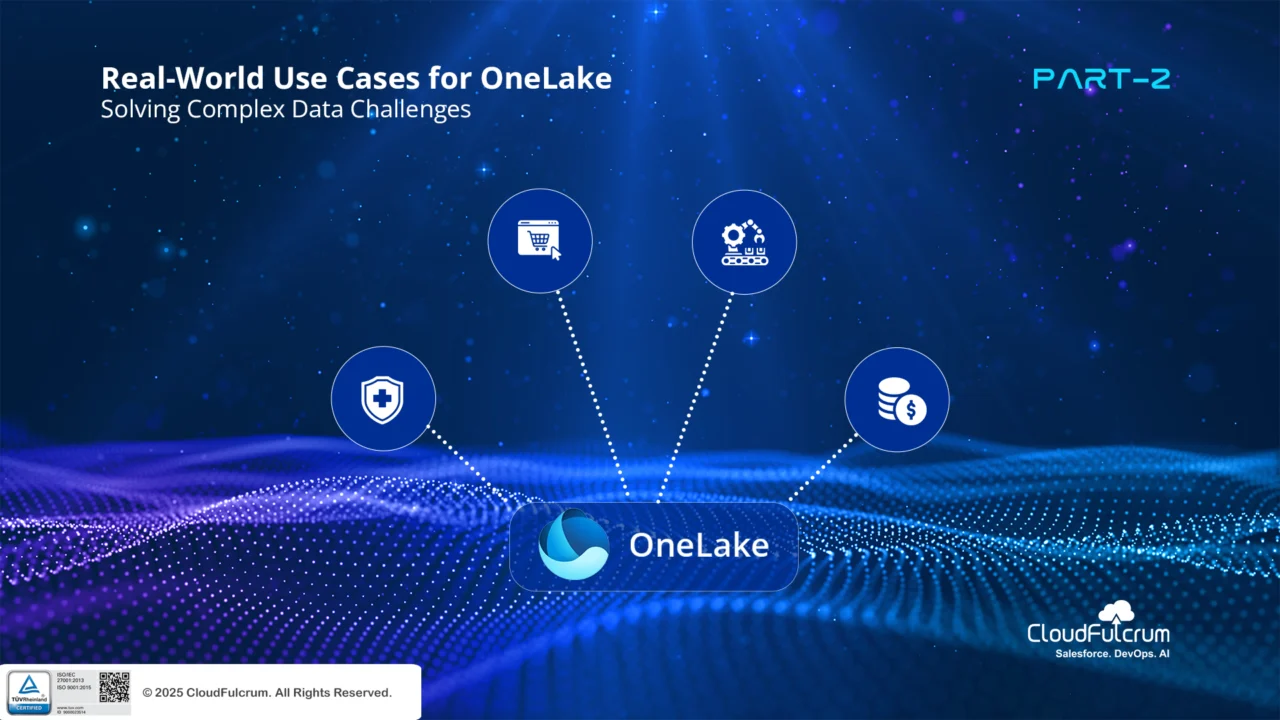

Welcome back to our deep dive into Microsoft OneLake. In Part 1, we explored the technical architecture behind its unified data foundation. In this part, we move from theory to four real-world use cases in retail, pharmaceuticals, manufacturing, and financial services. For each one, we look at the data challenge, how OneLake was applied, and the technical advantages it brought.

1. Streamlining Cross-Departmental Analytics in a Global Retail Organization:

- The Challenge: A large multinational retailer struggled with siloed data across its online sales, brick-and-mortar stores, marketing campaigns, and supply chain systems. Extracting a holistic view of customer behavior and operational efficiency required complex ETL processes and often resulted in data latency and inconsistencies.

- The OneLake Solution: By adopting Microsoft Fabric with OneLake as the central data repository, the retailer could ingest data from all these disparate sources into a single, unified logical lake.

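- Technical Deep Dive: Shortcuts were used extensively to reference existing Azure Data Lake Storage Gen2 accounts housing web analytics and marketing data, without copying that data. Transactional data managed in Azure SQL Database was also brought into OneLake; because shortcuts target storage locations rather than relational databases, that path typically relies on mirroring or pipelines rather than a shortcut. Delta Lake format was enforced for all transactional and analytical data within OneLake, providing ACID guarantees.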

- Impact: Data analysts across different departments could now access and analyze integrated datasets without the need for data replication or complex data movement. Synapse Data Engineering was used to build robust data pipelines for cleansing and transforming data within OneLake. This enabled a unified view of customer journeys, optimized inventory management based on real-time sales data, and more effective cross-channel marketing strategies.
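
As a rough illustration of how analysts reach the same data from any engine, the sketch below builds the ABFS-style URI for a lakehouse table (or a table shortcut) in OneLake. The workspace, lakehouse, and table names are hypothetical, and the helper `onelake_table_uri` is ours, not part of any Fabric SDK.

```python
# Minimal sketch: build the OneLake URI for a Delta table in a lakehouse.
# Assumption: names contain no "/" and need no URL encoding.

ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_table_uri(workspace: str, lakehouse: str, table: str) -> str:
    """Return the abfss:// URI of a table (or table shortcut) in a lakehouse."""
    for part in (workspace, lakehouse, table):
        if not part or "/" in part:
            raise ValueError(f"invalid path component: {part!r}")
    return (
        f"abfss://{workspace}@{ONELAKE_HOST}/"
        f"{lakehouse}.Lakehouse/Tables/{table}"
    )


# Hypothetical names, for illustration only.
uri = onelake_table_uri("RetailAnalytics", "SalesLakehouse", "web_sessions")
print(uri)
# In a Fabric notebook, a Spark session could then read the table with
# something like: spark.read.format("delta").load(uri)
```

Because a shortcut appears under the same path structure as a native table, the consuming code does not need to know whether the data physically lives in OneLake or in the referenced storage account.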

2. Accelerating Clinical Trial Analysis in a Pharmaceutical Company:

- The Challenge: A leading pharmaceutical company faced significant delays in analyzing data from multi-site clinical trials. Data was often stored in various formats and locations, making it difficult for researchers to collaborate and derive timely insights on drug efficacy and safety.

- The OneLake Solution: OneLake provided a secure and collaborative platform for centralizing clinical trial data.

- Technical Deep Dive: Data from different research sites, in formats such as CSV and Parquet as well as unstructured documents, was ingested into designated workspaces within OneLake. Access controls built on Microsoft Entra ID (formerly Azure AD) and Fabric workspace roles restricted who could see which data, supporting security and compliance requirements such as HIPAA. Metadata management through Microsoft Purview allowed researchers to easily discover and understand relevant datasets.

- Impact: Data scientists using Synapse Data Science could directly access and analyze the unified data in OneLake using Spark and Python. This accelerated analysis, made collaboration among research teams easier, and shortened the time to market for new drugs while adhering to stringent regulatory requirements.
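
To make the multi-format ingestion step more concrete, here is a minimal sketch of harmonizing CSV exports from two sites into one shared schema before they land in a common table. The site names, column names, and the `harmonize` helper are all hypothetical; a production pipeline would more likely do this in Spark, but the mapping idea is the same.

```python
import csv
import io

# Hypothetical per-site column names mapped to a shared schema.
COLUMN_MAP = {
    "site_a": {"PatientID": "subject_id", "Dose_mg": "dose_mg", "Outcome": "outcome"},
    "site_b": {"subj": "subject_id", "dose": "dose_mg", "result": "outcome"},
}


def harmonize(site: str, csv_text: str) -> list[dict]:
    """Rename site-specific columns, normalize types, and tag the source site."""
    mapping = COLUMN_MAP[site]
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = {target: record[source].strip() for source, target in mapping.items()}
        row["dose_mg"] = float(row["dose_mg"])  # unify numeric type across sites
        row["site"] = site                      # keep lineage for later auditing
        rows.append(row)
    return rows


# Made-up sample exports, for illustration only.
site_a_csv = "PatientID,Dose_mg,Outcome\nA-001,50,improved\n"
site_b_csv = "subj,dose,result\nB-117, 25.5 ,no change\n"

unified = harmonize("site_a", site_a_csv) + harmonize("site_b", site_b_csv)
for row in unified:
    print(row)
```

Keeping the originating site on every row is a deliberate choice here: it preserves traceability, which matters in a regulated setting.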

3. Optimizing Predictive Maintenance in a Manufacturing Plant:

- The Challenge: A large manufacturing plant generated massive amounts of time-series data from IoT sensors on its machinery. Analyzing this data to predict potential equipment failures and optimize maintenance schedules was complex due to the volume and velocity of the data, as well as its distributed nature across various plant systems.

- The OneLake Solution: OneLake served as the scalable data lake to ingest and process this high-volume IoT data in near real-time.

- Technical Deep Dive: Data from Azure IoT Hub was streamed into OneLake. Synapse Real-Time Analytics, leveraging KQL, was used to perform initial filtering and aggregation. Synapse Data Engineering with Spark was then employed for more complex feature engineering on historical data stored in Delta Lake within OneLake.

- Impact: Data scientists built predictive maintenance models using Synapse Data Science, training them on the unified IoT data in OneLake. These models could then be deployed to provide real-time alerts on potential equipment failures, allowing for proactive maintenance, reducing downtime, and optimizing operational efficiency. Power BI dashboards, connected directly to OneLake, provided plant managers with a comprehensive view of equipment health and predicted maintenance schedules.
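
The "initial filtering and aggregation" step can be pictured as a tumbling-window average per machine, which is roughly what a KQL `summarize ... by bin(...)` query expresses. The sketch below reproduces that idea in plain Python; the machine IDs, readings, and the `windowed_mean` helper are hypothetical and stand in for the real streaming engine.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def windowed_mean(readings, window_seconds=60):
    """Average sensor values per (machine_id, tumbling-window start).

    `readings` is an iterable of (machine_id, timezone-aware datetime, value).
    Window starts are returned as Unix epoch seconds.
    """
    buckets = defaultdict(list)
    for machine_id, ts, value in readings:
        epoch = int(ts.timestamp())
        window_start = epoch - epoch % window_seconds  # floor to window boundary
        buckets[(machine_id, window_start)].append(value)
    return {key: sum(vals) / len(vals) for key, vals in sorted(buckets.items())}


# Made-up temperature readings, for illustration only.
t0 = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
readings = [
    ("press-1", t0, 70.0),
    ("press-1", t0 + timedelta(seconds=30), 74.0),
    ("press-1", t0 + timedelta(seconds=65), 90.0),
]

for (machine, start), mean in windowed_mean(readings).items():
    print(machine, datetime.fromtimestamp(start, tz=timezone.utc).isoformat(), mean)
```

Aggregates like these are the kind of compact features that a downstream model can train on, instead of the raw high-frequency signal.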

4. Enabling Real-Time Fraud Detection in a Financial Institution:

- The Challenge: A financial institution needed to enhance its real-time fraud detection capabilities by analyzing transactional data, customer behavior patterns, and external threat intelligence feeds. The disparate nature of these data sources and the need for low-latency analysis posed a significant technical challenge.

- The OneLake Solution: OneLake provided a unified platform to integrate these diverse data streams for real-time analysis.

- Technical Deep Dive: Transactional data from Azure SQL Database, customer interaction data from CRM systems (accessed via shortcuts), and streaming threat intelligence feeds were ingested into OneLake. Synapse Real-Time Analytics with KQL was used to analyze the streaming data and correlate it with historical patterns stored in Delta Lake within OneLake.

- Impact: The financial institution could build sophisticated real-time fraud detection rules and machine learning models (using Synapse Data Science on the unified data) to identify and prevent fraudulent transactions with greater accuracy and speed, minimizing financial losses and improving customer trust.
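
One simple example of a real-time fraud rule is a velocity check: flag an account that makes more than a given number of transactions inside a sliding time window. The sketch below is an assumption about what such a rule could look like, not the institution's actual logic; the `VelocityRule` class, thresholds, and account ID are hypothetical, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag accounts exceeding `max_events` transactions in `window_seconds`."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # account_id -> recent timestamps

    def observe(self, account_id: str, timestamp: float) -> bool:
        """Record one transaction; return True if the account is over the limit.

        Assumes timestamps (in seconds) arrive in non-decreasing order.
        """
        events = self._recent[account_id]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_events


# Made-up stream: four transactions in 30 seconds, then one much later.
rule = VelocityRule(max_events=3, window_seconds=60)
flags = [rule.observe("acct-42", t) for t in (0, 10, 20, 30, 200)]
print(flags)  # [False, False, False, True, False]
```

In practice a rule like this would be one signal among several, combined with model scores trained on the historical patterns described above.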

Conclusion:

These real-world examples illustrate the transformative potential of OneLake in addressing complex data challenges across diverse industries. By providing a unified, scalable, and governed data foundation, OneLake empowers organizations to break down silos, accelerate analytics, and drive innovation. In our final installment, we will delve into the best practices for organizing, securing, and optimizing your OneLake environment to maximize its value.

What specific data challenges are you facing in your organization, and how might a unified data lake like OneLake provide a solution? Share your thoughts in the comments below. Join us for the final part of our OneLake deep dive series.